Why Responsible AI Is More Than Just a Buzzword

Artificial Intelligence (AI) has gone from science fiction to an essential part of everyday life. From personalized recommendations to autonomous systems, AI is transforming how we live and work. But with great power comes great responsibility—and in the case of AI, that means ensuring these systems operate ethically, transparently, and safely. Enter: Responsible AI.

Russell

3/31/2025 · 3 min read

What Is Responsible AI?

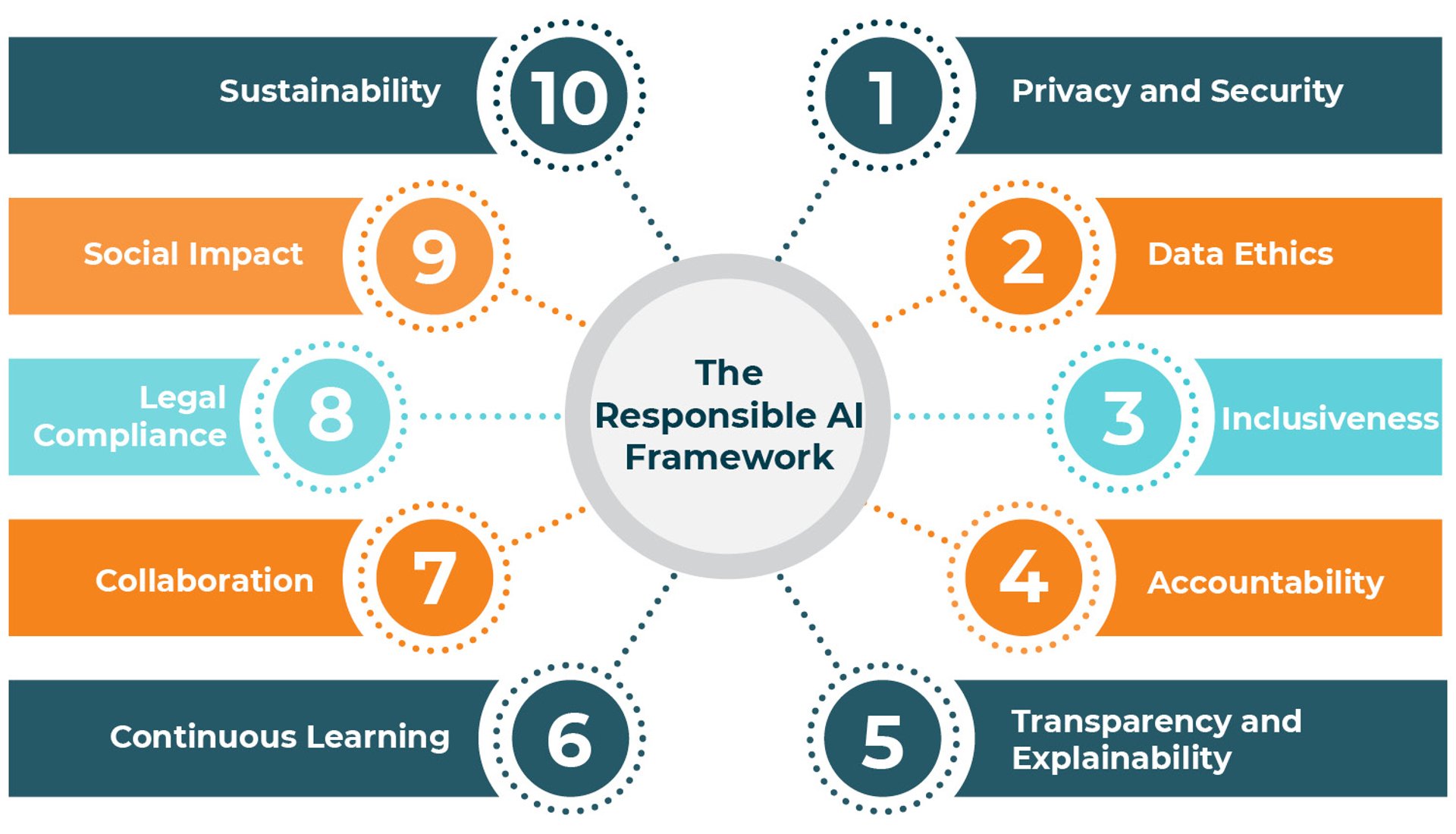

Responsible AI refers to the design, development, and deployment of AI systems in ways that align with ethical values, laws, and societal expectations. It is not just a set of ideals; it is a framework built on practical principles such as:

Fairness: Avoiding bias and ensuring equitable outcomes for all users.

Transparency: Making AI decisions understandable to humans.

Accountability: Defining clear responsibility for AI behavior and outcomes.

Security & Safety: Protecting systems from manipulation and ensuring they behave reliably.

Privacy: Safeguarding personal data and respecting consent.

Inclusivity: Designing with diverse users and impacts in mind.

Responsible AI helps organizations build trust, reduce risk, and enhance the value of AI initiatives—but achieving it takes intentional effort.

Why It Matters: The Stakes Are High

When AI goes wrong, the consequences can be serious:

Biased hiring algorithms that disadvantage women or minorities

Facial recognition systems that misidentify people of color

Predictive policing tools that reinforce systemic injustices

Medical AI systems trained on unrepresentative data, putting lives at risk

These issues aren’t hypothetical. They’re real, documented, and damaging—not just for the individuals affected but also for the companies and governments behind the tools.

A lack of responsibility in AI can lead to reputational damage, legal liability, and loss of customer trust. On the flip side, organizations that adopt Responsible AI practices are better positioned to innovate with confidence.

Real-World Lessons: Where AI Has Gone Wrong (and Right)

Google faced public backlash and internal conflict after firing Timnit Gebru and Margaret Mitchell, the co-leads of its Ethical AI team, in 2020 and 2021, raising questions about how seriously it took AI accountability.

The EU’s AI Act is one of the first sweeping regulatory efforts to classify AI systems by risk and enforce strict transparency and oversight measures.

Microsoft and OpenAI have increasingly embedded guardrails in their models—like content filters, refusal mechanisms, and human-in-the-loop features—to minimize harm and boost trust.

These examples highlight two facts: the public is watching, and regulation is coming fast. Responsible AI isn’t just a nice-to-have—it’s a business imperative.

The Challenges of Getting It Right

Building AI responsibly isn’t easy. Teams face challenges like:

Opaque Models: Many AI models, especially deep learning ones, are hard to interpret.

Bias in Data: AI systems often reflect the biases of the data they’re trained on.

Ethics vs. Profit: Fast delivery and cost savings sometimes outweigh caution.

Lack of Diverse Voices: Homogeneous teams may overlook risks to marginalized groups.

Tackling these issues requires a shift in mindset and organizational culture—AI isn't just a technical problem; it's a human one.

How to Start Practicing Responsible AI

Responsible AI doesn’t have to be overwhelming. Here’s how organizations can get started:

Establish ethical guidelines for AI development aligned with your mission and values.

Conduct bias and fairness audits on models before deployment, and monitor them continuously afterward.

Use model cards and data documentation to track assumptions, limitations, and risks.

Create cross-functional governance teams including legal, compliance, HR, and data science stakeholders.

Educate teams on ethical AI design—make it part of your product development lifecycle.
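A pre-deployment bias audit can start with something as simple as comparing how often a model produces a favorable outcome for each group. The Python sketch below is a minimal illustration, not a prescribed method: it assumes a binary classifier (1 = favorable outcome) and a single group attribute, and the function names, sample data, and 0.8 threshold (borrowed from the "four-fifths" rule of thumb used in U.S. employment-selection guidance) are all illustrative assumptions. Dedicated fairness toolkits cover many more metrics than this.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the fraction of favorable (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def fairness_audit(predictions, groups, threshold=0.8):
    """Compare selection rates across groups (hypothetical helper).

    threshold: minimum acceptable ratio of the lowest to the highest
    selection rate. 0.8 mirrors the "four-fifths" rule of thumb and is
    an assumption here, not a universal standard.
    """
    rates = selection_rates(predictions, groups)
    lowest, highest = min(rates.values()), max(rates.values())
    # If no group receives any favorable outcome, there is no disparity to measure.
    ratio = lowest / highest if highest > 0 else 1.0
    return {
        "selection_rates": rates,
        "parity_difference": highest - lowest,
        "disparate_impact_ratio": ratio,
        "passes": ratio >= threshold,
    }


# Hypothetical screening-model outputs: 1 = advance candidate, 0 = reject.
preds = [1, 1, 0, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

report = fairness_audit(preds, groups)
print(report)
```

In this made-up example, group B is selected at a quarter of group A's rate, so the audit flags the model. Passing a single ratio check does not by itself establish that a model is fair; it is one signal worth recomputing on fresh data as part of continuous monitoring.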

Tools & Frameworks to Help

You don’t have to start from scratch. Open-source and enterprise-grade tools are available to help operationalize Responsible AI.

These tools support fairness testing, explainability, bias detection, and model transparency—critical components of any responsible AI workflow.

Final Thoughts: Trust Is the True ROI

The future of AI isn’t just about pushing the boundaries of what’s possible—it’s about building systems people can trust. Responsible AI is not a technical afterthought or a PR strategy. It’s a competitive advantage rooted in transparency, inclusivity, and accountability.

In a world where AI touches nearly every aspect of society, we must move beyond the hype and focus on doing it right. Because at the end of the day, the question isn’t “Can we build it?”—it’s “Should we, and how?”

Need Help With Responsible AI?

Whether you're just starting your AI journey or looking to formalize your AI/ML governance program, Cyberdiligent can help.

We work with organizations to:

Build practical, risk-based AI governance frameworks

Conduct AI bias, security, and compliance assessments

Design responsible AI policies aligned with global standards

Enable executive teams to make informed, ethical decisions about AI

At Cyberdiligent, we don’t just deliver services — we help you lead with certainty. Whether navigating evolving threats, regulatory complexity, or AI governance, our expert advisory gives you the clarity to act, the control to adapt, and the confidence to grow securely.

Let’s connect.

Reach out today to discover how we can partner to protect what matters most — and move your business forward with purpose and precision.

📩 Complete the form or email us directly. A member of our team will respond within one business day.